What’s in a data engineer?

A data engineer is someone applying software engineering practices to data - storing, moving and modeling data in a production capacity. Historically this has involved stitching together many systems, writing code, working with distributed infrastructure depending on scale, and making it repeatable and resilient.

They typically work at companies that either have significant quantities of data or high demand for using that data for both operational and analytics purposes

The shift to the warehouse

For the purposes of this piece, we’ll use Snowflake to represent the cloud data warehouse offerings. They seem to have the clearest strategy & the most success when it comes to moving workloads into the warehouse.

Warehouse-native data engineering

A modern data engineering pattern has evolved for workloads that are analytical in nature, driven by new companies, a scarcity of data engineering skills, and access to new tools. While there exist many enterprises that still run their data engineering practices out of Hadoop and Spark, the ELT pattern supposed by Fivetran/dbt/Snowflake reigns supreme as the most popular way to get data into a new warehouse and then mold it with SQL to your liking for the purpose of analytics.

This has been encouraged (obviously) by Snowflake. Fivetran and dbt were the 2022 and 2023 data integration partners of the year for Snowflake respectively, and for good reason: there’s a ton of transformation now happening in the warehouse. This modern stack simplifies transformation in the warehouse, and extends access to that transformation to a less technical user, at the expense of Snowflake credits and scaling limitations.

Traditional data engineering

There exist workloads beyond in-warehouse transformations, that I would call “traditional data engineering”. These workloads involve use cases beyond the SQL transformation, which is most often the best fit for a warehouse.

These are compute intensive, judging by the kind of technologies that data engineers with big data typically use: Spark, Hive, Docker, Kubernetes, Flink, Kafka, Dask, Ray, Presto, Iceberg, Cassandra, and others. They feature heavy transformations written in code, operational workflows, and reactive data movement such as events or streaming. In short: they’re heavy, they are complex, and they are a major growth opportunity for Snowflake now that the analytical workloads are well-in-hand.

There’s great opportunity for Snowflake in traditional data engineering, as it can drive huge consumption. The introduction of Snowpark, which encompasses support for Python, Java, Scala, and now even containers, appears to be Snowflake’s native answer to non-SQL workloads like ML, data quality, streaming, etc.

With Snowpark, now you can run your custom code in the warehouse - don’t land your data elsewhere, just put it all in Snowflake! If you bring your transformations to Snowflake, will they be cheaper than wherever you are running them now? 🤷🏼 Maybe, and this appears to be the story that Snowflake is telling, particularly with Databricks in mind.

There is some reported adoption of Snowpark, but anecdotally, not that many people that I know are using it. Using Snowpark requires that the data exists in the warehouse, which is less likely to happen the more complex the workload (does it use many tools, stitch together multiple data sources, etc.). This aligns with Snowflake’s previous strategy of bringing as much consumption as possible into the walled garden.

The future of data engineering

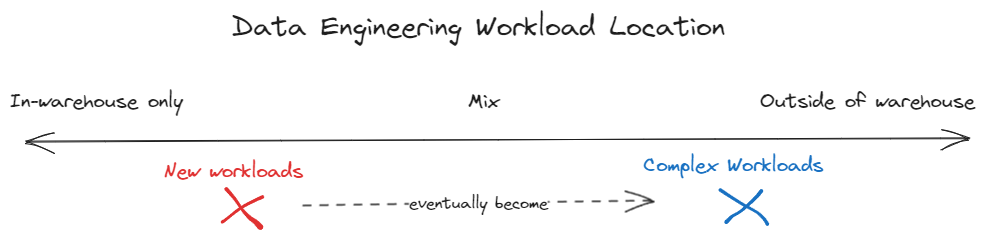

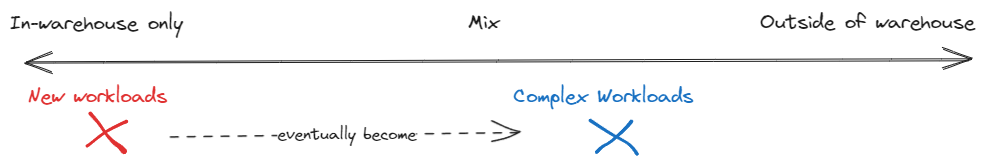

Imagine some spectrum of data engineering workloads, split between those solely in the warehouse, those solely outside of the warehouse, and those in between. How do our data engineering profiles map to this spectrum?

Today the basic, “modern” workloads are mostly being built with the “modern” data engineering pattern referenced above. Think of new companies setting up a warehouse, BI and analytics for small teams, analytics engineers, etc. Eventually, some of those workloads shift into the territory of traditional dara engineering - meaning they add complexity in the form of data sources, SLAs, or requirements that mean the warehouse alone is not enough.

Over time, these workloads will both shift left. It is bound to happen given the tools they’ve built under the Snowpark umbrella and the natural movement of more workloads from on-premise to the cloud. Snowflake will consume even more new workloads with Snowpark, and traditional workloads will continue their move to the cloud as they start to incorporate warehouses to a greater degree (more than just as a source/destination, or for a small number of transformations). Something like this:

Traditional data engineering workloads are the largest and most valuable to vendors, not only for the scale (big companies do lots of data engineering) and the actual consumption that they represent (changing lots of 1s and 0s is worth $$$), but also for the end product that they produce: analytical datasets, aka Snowflake’s bread & butter.

It is most likely that the future state for traditional workloads is living in BOTH in the warehouse and outside of the warehouse. It does not seem reasonable that Snowflake, that previously was a data warehouse, could encompass all data engineering (transformation + operational workflows) within itself effectively. Not only has it not been done before, it seems incredibly unlikely to happen for several reasons:

- Their compute engine is optimized for OLAP

- Snowflake does have a “single platform for analytical and transactional” story called Unistore, but it was announced in June 2022 and a public preview is still coming soon.

- They have Python/Java/Scala support, but it requires that data to be in the warehouse and it can’t federate

- Transformations are compute-intensive and probably very expensive on Snowflake

- Due to Snowflake’s business model, they want all data in the Snowflake walled garden - this is a disincentive to playing nicely with others

What will Snowflake do?

Ultimately, this comes down to a bet by Snowflake.

- Do they think they can build a platform that can capture complex data engineering workflows?

- If they accept the premise that complex workloads will only ever partially live on Snowflake at best, which is more valuable:

- Focusing on data engineering within the Snowflake platform (their same old playbook), at the expense of hybrid workloads

- Encouraging hybrid data engineering at the expense of maximizing data engineering on the Snowflake platform, in order to capture & monetize the associated analytical workloads

The future of data engineering in the warehouse is a bit of a clickbait title, I admit that. It really matters a lot, to two primary groups:

The user

Data warehouses make analytics easier. Imagine if they made data engineering easier, at scale, for even the hardest workloads! Moats would be drained, walls broken down, companies would rise & fall.

The vendors

Snowflake has enjoyed remarkable growth over the past 10 years, culminating in their IPO last year. A continued growth story depends on pulling more traditional data engineering into the warehouse. BigQuery, Redshift, and Databricks (in the opposite sense) rely on similar capabilities.

What will happen?

If the cloud warehousing vendors continue to make big bets on data engineering, operating based on their familiar goals (“get it in the warehouse!”), they are likely to do so by optimizing for workflows that happen totally in the warehouse at the expense of hybrid workloads that are mixed between the warehouse and elsewhere. Snowflake and other vendors are already in danger of becoming a panacea by making a push for data engineering. If they optimize for an in-warehouse singularity - they are almost certain to disrupt themselves by rolling out a substand product in the context of data engineering, while losing focus on their core business of warehousing for analytics.

To be clear, I hope that is not the case. Instead, I hope that the warehouse vendors build modular data engineering that can fit elegantly as a cog in a machine, instead of acting as an opaque machine. There is a world where this is possible, and I hope that we’re living in that timeline. Let’s all pay attention, as the future unfolds in front of us. It is going to be great.

Many thanks to the people that reviewed and provided suggestions for this post, including Sarah Bedell, Chris White, and Bill Palombi.

A new feature hails below!

What was I listening to when writing this?

Dire Straits - Once Upon A Time In The West - Live At Hammersmith Odeon